
Energy-harvesting devices efficiently and effectively capture, accumulate, store, condition and manage ambient energy and supply it in a form that can be used to perform a useful task. An Energy Harvesting Module is an electronic device that performs all of these functions to power a variety of sensor and control circuitry in intermittent-duty applications. Technical advances have increased the efficiency with which devices capture trace amounts of energy from the environment and transform them into electrical energy. Advances in microprocessor technology have also increased power efficiency, effectively reducing power consumption requirements. In combination, these developments have sparked interest in the engineering community in developing more applications that use energy harvesting for power. Many real-life applications use energy harvesting systems today. Wireless sensor network systems, such as ZigBee systems, often benefit from energy harvesting power sources. For example, when a wireless node is deployed at a remote site where a wall plug or a battery is either unreliable or unavailable, energy harvesting can augment or supply power. In another example, a remote control node running on energy harvesting can be implemented as a self-powered electronic system.

In yet other situations, multiple energy sources can be combined to enhance the overall efficiency and reliability of a system.

Energy Harvesting: Ideal for Power-Hungry IoT and Wireless Networks

The Internet of Things (IoT) consists of sensors and smart objects that are connected to the internet anytime and anywhere. Acting as the perception layer of the IoT, wireless sensor networks (WSNs) play an important role by detecting events and collecting information about the surrounding context and environment. Because sensors are battery powered, replacing the batteries in every smart object or sensor is very difficult in practice.

For the sustainability of network operations, energy harvesting and energy management technologies have recently gained much attention. Energy management is the most important technology for prolonging the network lifetime of WSNs. The design of efficient energy management involves several aspects, including physical, MAC and network elements as well as application aspects.

Energy harvesting is a novel and promising solution in which each node is equipped with a harvesting module. Recent research efforts in energy harvesting can be classified into two categories: energy scavenging and energy transfer. In energy scavenging technologies, the sensor is recharged from ambient sources, including electromagnetic waves, thermal energy and wind energy. In energy transfer technologies, a mobile node plays the role of a charger that wirelessly transfers energy to the sensors located within its recharging range.
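The balance between harvested and consumed power can be illustrated with a rough worked example. The sketch below assumes a simple two-state node (active or asleep) and asks what fraction of the time the node can stay active without draining its storage on average. The function name `max_duty_cycle` and all power figures are hypothetical values chosen for illustration, not measurements from any particular module.

```python
def max_duty_cycle(p_harvest_w, p_active_w, p_sleep_w):
    """Largest active-time fraction d that keeps the node energy neutral.

    Average consumption is d * p_active + (1 - d) * p_sleep. Setting it
    equal to the harvested power and solving for d gives the formula below.
    """
    if p_active_w <= p_sleep_w:
        raise ValueError("active power must exceed sleep power")
    d = (p_harvest_w - p_sleep_w) / (p_active_w - p_sleep_w)
    # Clamp to [0, 1]: 0 means even sleeping is not sustainable,
    # 1 means the node could stay active all the time.
    return max(0.0, min(1.0, d))


# Hypothetical figures: 100 uW harvested, 30 mW while active, 5 uW asleep.
duty = max_duty_cycle(p_harvest_w=100e-6, p_active_w=30e-3, p_sleep_w=5e-6)
print(f"Sustainable duty cycle: {duty:.2%}")
```

With these assumed numbers the node can be active only a fraction of a percent of the time, which is why harvesting is typically paired with the energy management techniques discussed above.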

Low-power wearables may soon bid farewell to batteries and start drawing on energy generated by body heat and movement, as well as ambient energy from the environment. Consumer electronics devices are getting smaller but conventional batteries are not, so it is important to start implementing new energy harvesting techniques to keep devices powered for long periods of time. There will be billions of Internet-connected devices supplying real-time information in the coming years. Data-gathering instruments today are designed around the size of their batteries, and self-powered devices could resolve some of these power and size issues.

Approximate computing is a promising approach to energy-efficient design of digital systems. Approximate computing relies on the ability of many systems and applications to tolerate some loss of quality or optimality in the computed result.

“As rising performance demands confront with plateauing resource budgets, approximate computing (AC) has become, not merely attractive, but even imperative. AC is based on the intuitive observation that while performing exact computation or maintaining peak-level service demand require high amount of resources, allowing selective approximation or occasional violation of the specification can provide disproportionate gains in efficiency. AC leverages the presence of error-tolerant code regions and perceptual limitations of users to trade-off implementation, storage and result accuracy for performance and energy gains. Thus, AC has the potential to benefit a wide range of applications/frameworks e.g. data analytics, scientific computing, multimedia and signal processing, machine learning and MapReduce, etc. This survey paper reviews techniques for approximate computing in CPU, GPU and FPGA and various processor components (e.g. cache, main memory), along with approximate storage in SRAM, DRAM/eDRAM, non-volatile memories, e.g. Flash, STT-RAM, etc.”
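One simple way to see the accuracy-for-energy trade is loop perforation, an approximation technique that skips a fraction of loop iterations. The sketch below is illustrative only: the function names and the synthetic sensor readings are assumptions, and skipped iterations stand in for saved work rather than measured energy.

```python
def exact_mean(samples):
    """Reference result: visits every sample."""
    return sum(samples) / len(samples)


def perforated_mean(samples, stride):
    """Approximate mean that visits only every `stride`-th sample."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    kept = samples[::stride]
    return sum(kept) / len(kept)


# Synthetic, slowly varying readings (hypothetical data).
readings = [20.0 + 0.01 * (i % 50) for i in range(1000)]

exact = exact_mean(readings)
approx = perforated_mean(readings, stride=4)  # roughly a quarter of the work
relative_error = abs(approx - exact) / exact

print(f"exact={exact:.4f} approx={approx:.4f} error={relative_error:.4%}")
```

The approximation is acceptable here only because the data varies smoothly; an application with sharp, rare events would tolerate much less skipping.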

The rapid development of mobile applications and Internet-of-Things (IoT) paradigm-based applications has brought several challenges to the development of cloud-based solutions. These challenges are mainly due to i) the transfer of huge amounts of data to the cloud, ii) high communication latencies and iii) the inability of this model to respond in some domains that require a rapid reaction to events.

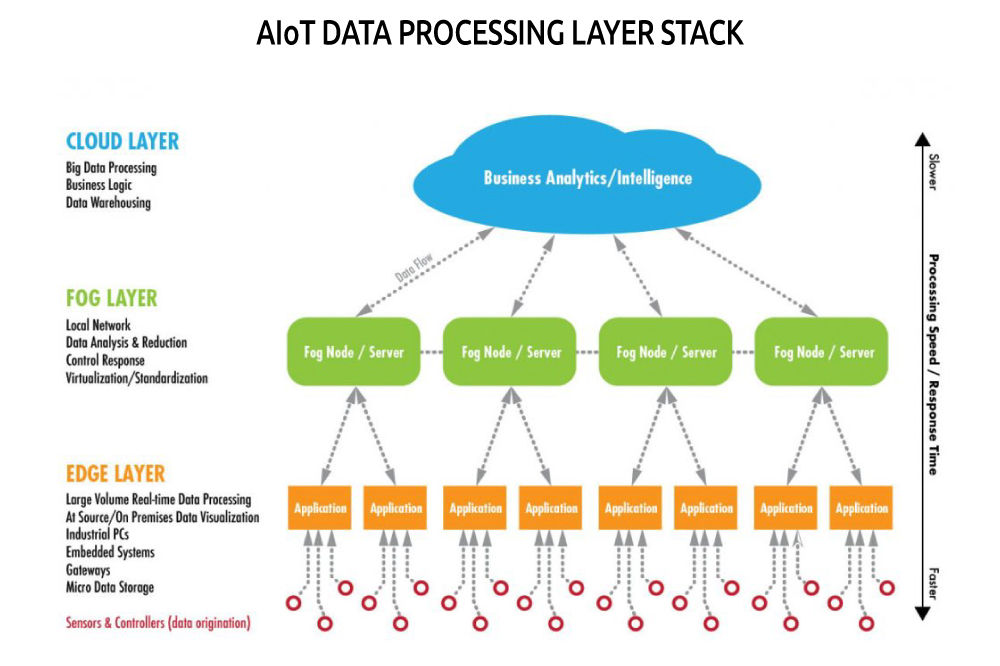

Growing interest in the Artificial Intelligence of Things (AIoT) in industrial environments has led organizations to rethink their infrastructures by adopting cloud, fog, and edge computing architectures. These architectures allow them to take advantage of a multitude of computing and data storage resources.

Cloud, fog, and edge computing may sound like very similar terms, but they differ: they function as distinct layers of the AIoT landscape that complement each other.

Cloud computing

Cloud computing can be defined as a model for the provision and use of Information and Communication Technologies, which allows remote access over the internet to a range of shared computing resources in the form of services.

A cloud computing system can be divided into two parts: the frontend (client devices) and the backend (servers). Its service delivery models are divided into three: Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS).

Adopting these architectures brings organizations benefits in performance, availability, scalability, and communication between IoT sensors and processing systems, as well as increased storage and processing capabilities.

Fog computing

Fog computing, in turn, is a term coined by Cisco in 2014 as a means of bringing cloud computing capabilities closer to the network edge. It is a decentralized computing architecture in which data, communications, storage, and applications are distributed between the data source and the cloud. That is, it is a horizontal architecture that shares resources and services, stored anywhere in the cloud, with Internet of Things devices.

Put briefly, fog computing is the layer below the cloud layer that manages the connections between the cloud and the network edge.

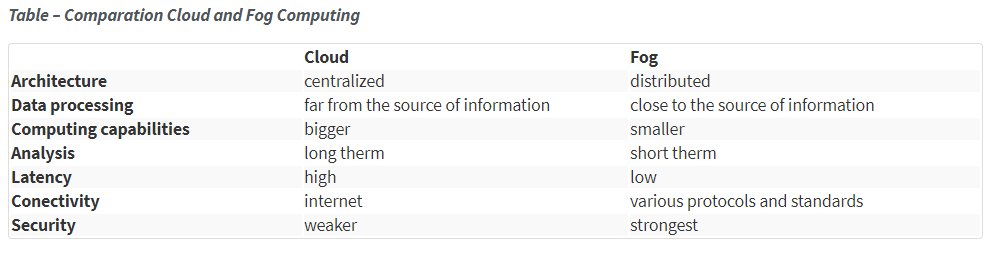

The big difference between fog computing and cloud computing is that cloud computing is a centralized system, while fog computing is a distributed, decentralized infrastructure. The following table presents a comparison.

Edge computing

Edge computing sits on the “border” of the network. This architecture brings processing even closer to the data source, so data does not have to be sent to a remote cloud or other centralized system for processing. By eliminating the distance and time needed to send data to centralized sources, it improves the speed and performance of data transport as well as of devices and applications.

The main difference between edge computing and fog computing lies in where the processing takes place. Edge computing occurs directly on the devices where the sensors are placed, or on a gateway that is physically close to the sensors.

The advantages of edge computing then lie in optimizing the connection and improving response time. Security is also enhanced, with data encryption occurring closer to the core of the network.

However, edge computing has a more limited scope of action, referring to individual and predefined instances of processing that occur at network ends.
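As a minimal sketch of the kind of predefined processing an edge device or gateway might perform, the example below reduces a window of raw sensor samples to a compact summary for upstream transfer and flags out-of-range values locally, without waiting for a round trip to the cloud. The names `Summary` and `process_at_edge`, the threshold, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record sent upstream instead of the raw samples."""
    count: int
    minimum: float
    maximum: float
    average: float


def process_at_edge(samples, alert_threshold):
    """Return (summary, alerts) for one window of raw samples.

    The summary is what would be forwarded to the fog or cloud layer;
    the alerts are readings that can be acted on locally right away.
    """
    if not samples:
        raise ValueError("need at least one sample")
    alerts = [s for s in samples if s > alert_threshold]
    summary = Summary(len(samples), min(samples), max(samples), mean(samples))
    return summary, alerts


# Hypothetical temperature window with one abnormal reading.
window = [21.0, 21.5, 22.0, 35.0, 21.5]
summary, alerts = process_at_edge(window, alert_threshold=30.0)
print(summary, alerts)
```

Forwarding one summary per window instead of every sample reduces the data sent to the cloud, and handling alerts locally avoids the communication latency of a centralized model.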

In practice, fog computing always uses edge computing, a solution that complements cloud computing. However, edge computing may or may not use fog computing. Also, fog computing typically includes the cloud, while the edge does not.